Two of the UW Reality Lab Directors each gave a keynote address at CVPR’20, and several of our researchers have papers, posters, and presentations there:

Prof. Ira Kemelmacher-Shlizerman (Director of the UW Reality Lab) gave a keynote at the Human-Centric Image/Video Synthesis Workshop

Prof. Steve Seitz (Co-Director of the UW Reality Lab) gave a keynote at the Fourth Workshop on Computer Vision for AR/VR

Slow Glass: Visualizing History in 3D

(Xuan Luo, Yanmeng Kong, Jason Lawrence, Ricardo Martin Brualla, Steven M. Seitz)

Wouldn’t it be fascinating to be in the same room as Abraham Lincoln, visit Thomas Edison in his laboratory, or step onto the streets of New York a hundred years ago? We explore this thought experiment, by tracing ideas from science fiction through newly available data sources that may facilitate this goal.

Background Matting: The World is Your Green Screen

(Soumyadip Sengupta, Vivek Jayaram, Brian Curless, Steve Seitz, Ira Kemelmacher-Shlizerman)

This project was used by Microsoft’s Build Conference, and Microsoft CEO Satya Nadella talked about it in this Fireside Chat:

“The next area, Harry, that we’re all so excited about in this world of remote everything, is Background Matting …we recently had the Build Developer Conference and we were able to take presenters who just recorded themselves at home, and then we were able in fact to superimpose them in a virtual stage without needing that Green Screen. …Again, breakthroughs in computer vision that, in fact, we worked with the University of Washington on. So let’s roll the video.”

– Satya Nadella

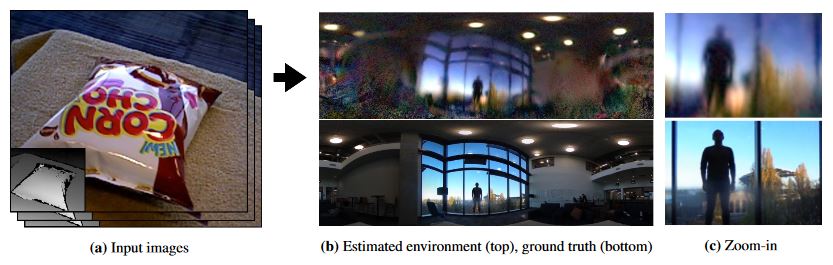

Seeing the World in a Bag of Chips

(Jeong Joon Park, Aleksander Holynski, Steve Seitz)

We address the dual problems of novel view synthesis and environment reconstruction from hand-held RGBD sensors. Our contributions include 1) modeling highly specular objects, 2) modeling inter-reflections and Fresnel effects, and 3) enabling surface light field reconstruction with the same input needed to reconstruct shape alone. In cases where scene surface has a strong mirror-like material component, we generate highly detailed environment images, revealing room composition, objects, people, buildings, and trees visible through windows. Our approach yields state of the art view synthesis techniques, operates on low dynamic range imagery, and is robust to geometric and calibration errors.

Scene Recomposition by Learning-Based ICP

(Hamid Izadinia, Steve Seitz)

By moving a depth sensor around a room, we compute a 3D CAD model of the environment, capturing the room shape and contents such as chairs, desks, sofas, and tables. Rather than reconstructing geometry, we match, place, and align each object in the scene to thousands of CAD models of objects. In addition to the fully automatic system, the key technical contribution is a novel approach for aligning CAD models to 3D scans, based on deep reinforcement learning. This approach, which we call Learning-based ICP, outperforms prior ICP methods in the literature, by learning the best points to match and conditioning on object viewpoint. LICP learns to align using only synthetic data and does not require ground truth annotation of object pose or keypoint pair matching in real scene scans. While LICP is trained on synthetic data and without 3D real scene annotations, it outperforms both learned local deep feature matching and geometric based alignment methods in real scenes. The proposed method is evaluated on real scenes datasets of SceneNN and ScanNet as well as synthetic scenes of SUNCG. High quality results are demonstrated on a range of real world scenes, with robustness to clutter, viewpoint, and occlusion.

Latent Fusion: End-to-End Differentiable Reconstruction and Rendering for Unseen Object Pose Estimation

(Keunhong Park, Arsalan Mousavian, Yu Xiang, Dieter Fox)