Our UW Reality Lab project on background matting has been getting attention lately – at Microsoft’s Build Conference, and in this Fireside Chat with Microsoft CEO Satya Nadella:

“The next area, Harry, that we’re all so excited about in this world of remote everything, is Background Matting …we recently had the Build Developer Conference and we were able to take presenters who just recorded themselves at home, and then we were able in fact to superimpose them in a virtual stage without needing that Green Screen. …Again, breakthroughs in computer vision that, in fact, we worked with the University of Washington on. So let’s roll the video.”

– Satya Nadella

David Carmona describes just how important this project was to making the 2020 Build Conference happen during a global pandemic:

“Covid-19 has made it impossible for large scale in-person events, so we are all looking for new ways to communicate with our customers. And again, if you participated in any part of Microsoft Build, then you experienced first-hand how our Microsoft Global Event’s team had to shift everything to virtual.”

– David Carmona

“…So, we built on top of the background matting process by using our Azure Kinect sensors with an AI model based on the work from University of Washington to create a new way for our presenters to easily record themselves from their home and appear on our virtual stage.”

Here is the project page, along with some of its results:

Background Matting: The World Is Your Green Screen

(Authors: Soumyadip Sengupta, Vivek Jayaram, Brian Curless, Steve Seitz, Ira Kemelmacher-Shlizerman)

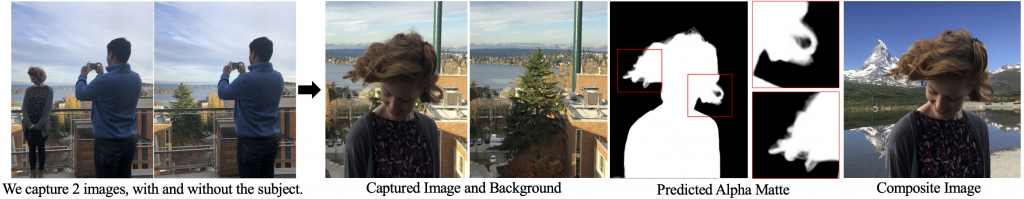

Abstract: We propose a method for creating a matte – the per-pixel foreground color and alpha – of a person by taking photos or videos in an everyday setting with a handheld camera. Most existing matting methods require a green screen background or a manually created trimap to produce a good matte. Automatic, trimap-free methods are appearing, but are not of comparable quality. In our trimap-free approach, we ask the user to take an additional photo of the background without the subject at the time of capture. This step requires a small amount of foresight but is far less time-consuming than creating a trimap. We train a deep network with an adversarial loss to predict the matte. We first train a matting network with supervised loss on ground truth data with synthetic composites. To bridge the domain gap to real imagery with no labeling, we train another matting network guided by the first network and by a discriminator that judges the quality of composites. We demonstrate results on a wide variety of photos and videos and show significant improvement over the state of the art.
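
For readers new to the term, a matte is what lets a person be placed on a new background: once the per-pixel foreground color F and alpha α are known, the new image is formed with the standard compositing equation C = αF + (1 − α)B. The snippet below is a minimal sketch of that final compositing step only, not of the network that predicts the matte. The function name `composite` and the tiny 1x3 test image are hypothetical and chosen purely for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel in [0, 1]
Image = List[List[Pixel]]           # rows of pixels


def composite(foreground: Image, alpha: List[List[float]], background: Image) -> Image:
    """Blend a matte (foreground color + alpha) over a new background, pixel by pixel."""
    out: Image = []
    for f_row, a_row, b_row in zip(foreground, alpha, background):
        out_row = []
        for f, a, b in zip(f_row, a_row, b_row):
            # Standard compositing equation: C = alpha * F + (1 - alpha) * B
            out_row.append(tuple(a * fc + (1.0 - a) * bc for fc, bc in zip(f, b)))
        out.append(out_row)
    return out


# Hypothetical 1x3 example: a red "subject" over a blue "virtual stage".
# Alpha 1.0 = fully subject, 0.5 = soft edge (e.g. hair), 0.0 = fully background.
fg = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
al = [[1.0, 0.5, 0.0]]
bg = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]

result = composite(fg, al, bg)
print(result)  # [[(1.0, 0.0, 0.0), (0.5, 0.0, 0.5), (0.0, 0.0, 1.0)]]
```

The middle pixel shows why alpha matters: a fractional alpha blends subject and background, which is what keeps fine details such as hair from looking cut out.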